Quick Start

To try out MCPMark quickly, we recommend first preparing the environment and then running the Postgres tasks.

1. Clone MCPMark

2. Set Up Environment Variables

To configure model access through environment variables, edit the .mcp_env file in the mcpmark/ directory.
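This page does not describe the contents of `.mcp_env` beyond its location. Assuming it uses the common dotenv `KEY=VALUE` syntax (an assumption, not confirmed here), the minimal Python sketch below shows how such a file maps onto environment variables. The function `load_env_file` is hypothetical and is not part of MCPMark.

```python
import os
from pathlib import Path


def load_env_file(path: str) -> dict[str, str]:
    """Hypothetical loader for a dotenv-style file (assumed format).

    Blank lines, lines starting with '#', and lines without '=' are skipped.
    Matching single or double quotes around a value are stripped.
    Each parsed pair is written to os.environ, overwriting existing values.
    """
    loaded: dict[str, str] = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split only on the first '=' so values may themselves contain '='.
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in ("'", '"'):
            value = value[1:-1]
        os.environ[key] = value
        loaded[key] = value
    return loaded
```

For example, a hypothetical line `EXAMPLE_API_KEY="sk-placeholder"` would be available as `os.environ["EXAMPLE_API_KEY"]` after calling `load_env_file("mcpmark/.mcp_env")`. The real variable names are whatever the project's own `.mcp_env` expects.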

3. Run Quick Example in MCPMark

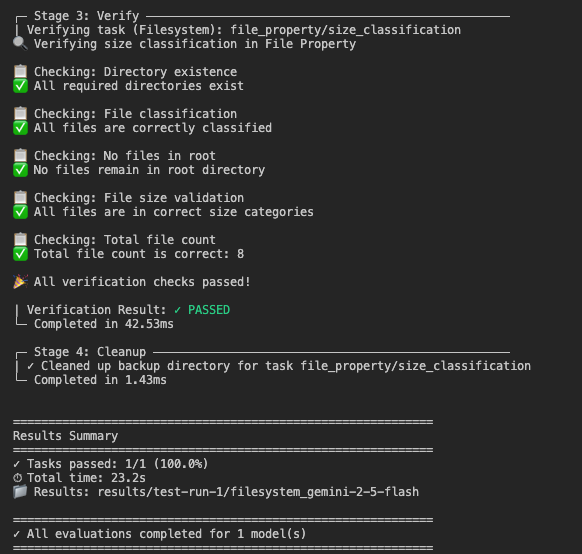

Suppose you want to run gemini-2.5-flash and name your experiment test-run-1. You can use the following command to test the size_classification task in file_property, which categorizes files by their size.

Here is the expected output (verification may fail depending on the model you choose).

The results are saved under results/{exp_name}/{mcp}_{model}/{tasks}. If exp-name is not specified, the experiment's timestamp is used as the default name; specifying exp-name is still recommended because it makes resuming experiments possible.
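The results layout can be illustrated with a small path-building function. This is a minimal Python sketch of the `results/{exp_name}/{mcp}_{model}/{tasks}` pattern, not MCPMark's own code; the function name `result_dir`, the timestamp format, and the example values `filesystem` and `file_property/size_classification` are hypothetical.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional


def result_dir(mcp: str, model: str, task: str,
               exp_name: Optional[str] = None,
               root: str = "results") -> Path:
    """Hypothetical helper building results/{exp_name}/{mcp}_{model}/{task}.

    When exp_name is None, the current time is used instead. The
    timestamp format below is an illustrative choice and may differ
    from the one MCPMark actually uses.
    """
    if exp_name is None:
        exp_name = datetime.now().strftime("%Y-%m-%d_%H-%M-%S")
    return Path(root) / exp_name / f"{mcp}_{model}" / task


# Example with hypothetical values:
print(result_dir("filesystem", "gemini-2.5-flash",
                 "file_property/size_classification",
                 exp_name="test-run-1").as_posix())
```

With the values above, this prints `results/test-run-1/filesystem_gemini-2.5-flash/file_property/size_classification`. Because a timestamp changes on every run, only an explicit exp-name sends a second run to the same directory, which is why naming the experiment matters for resuming.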

For other MCP services, please refer to the Installation and Docker Usage pages for detailed instructions.